Exploring the journey of computer history reveals key milestones, from ancient calculators to modern systems․ Tracing this evolution inspires innovation and appreciation for technological advancements․

1․1․ Overview of Computer Evolution

The evolution of computers traces a remarkable journey from ancient counting tools to sophisticated digital machines․ Early mechanical devices like the abacus laid the groundwork for more complex calculators․ The transition to electromechanical and electronic computers in the 20th century revolutionized computing․ Key inventions, such as the integrated circuit and microprocessor, drove miniaturization and enhanced performance․ This progression transformed computers from bulky, specialized instruments into indispensable, everyday technologies, profoundly impacting various fields and reshaping how humanity lives, works, and innovates․

1․2․ Importance of Studying Computer History

Studying the history of computers provides invaluable insights into technological progress and innovation․ It reveals how early challenges were overcome, shaping modern computing․ Understanding the evolution of ideas and inventions fosters appreciation for the complexity of digital systems․ This knowledge also highlights the contributions of pioneers, inspiring future advancements․ For students and professionals alike, exploring computer history offers a foundation for understanding current technologies and anticipating future developments․ It bridges the gap between past innovations and contemporary advancements, enriching one’s perspective on the ever-evolving digital world․

1․3․ Key Milestones in Computer Development

Key milestones in computer development include the invention of the abacus, the creation of mechanical calculating machines, and the transition to electronic systems. ENIAC was the first large-scale electronic computer, while innovations like the integrated circuit and the microprocessor revolutionized computing. These advancements laid the foundation for modern computing, enabling smaller, faster, and more powerful machines. Each milestone represents a significant leap forward, showcasing human ingenuity and the relentless pursuit of technological progress.

Early Mechanical Computing Devices

Mechanical computing devices began with the abacus, evolving into complex machines like the Difference Engine․ These innovations laid the groundwork for modern computational technology and problem-solving methods․

2․1․ The Abacus: The First Mechanical Calculator

The abacus, whose earliest forms are usually dated to ancient Mesopotamia around 2700–2300 BC, is widely regarded as the first calculating device. In its familiar form, a wooden frame with rods and sliding beads allowed basic arithmetic operations, simplifying counting and trade. On the Chinese abacus, the frame is divided into “heaven” (upper) and “earth” (lower) sections, and numbers are represented through bead positions. This ancient tool laid the foundation for mechanical computing, demonstrating early human ingenuity in problem-solving. Its simplicity and effectiveness made it a cornerstone in the evolution of computational devices, influencing later innovations in mathematics and technology.
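
To make the idea of representing numbers through bead positions concrete, here is a minimal sketch. It assumes one decimal digit per rod, with each heaven bead worth 5 and each earth bead worth 1; those values and the function names are illustrative assumptions, not something stated above.

```python
def abacus_rods(number: int, rods: int = 5) -> list[tuple[int, int]]:
    """Return (heaven_beads, earth_beads) for each rod, most significant first.

    Assumption: a heaven bead counts as 5 and an earth bead counts as 1.
    """
    if number < 0 or number >= 10 ** rods:
        raise ValueError("number does not fit on the given rods")
    digits = [int(ch) for ch in str(number).zfill(rods)]
    # divmod(d, 5) splits a digit into fives (heaven) and ones (earth).
    return [divmod(d, 5) for d in digits]


def abacus_value(beads: list[tuple[int, int]]) -> int:
    """Read the number back from the bead positions."""
    value = 0
    for heaven, earth in beads:
        value = value * 10 + heaven * 5 + earth
    return value


print(abacus_rods(1976))
```

For 1976 on five rods, the digit 7 becomes one heaven bead and two earth beads, and reading the beads back with `abacus_value` recovers the original number.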

2․2․ The Development of Mechanical Computers

Mechanical calculation advanced significantly after the abacus. Charles Babbage’s Difference Engine automated the production of mathematical tables, and his later Analytical Engine design added programmability. Together, these machines introduced concepts of automation and data processing. The transition from manual calculation to mechanical computation marked a pivotal shift, enabling complex mathematical tasks with greater accuracy. This era laid the groundwork for future innovations, blending mechanical precision with early ideas of programmability, and setting the stage for the eventual development of electronic computing technologies in the 20th century.

2․3․ The Difference Engine and Analytical Engine

Charles Babbage’s Difference Engine, designed in the early 19th century, was a mechanical calculator for automating the production of mathematical tables. Although never completed in his lifetime, it influenced later innovations. The Analytical Engine, proposed in the 1830s, was a groundbreaking concept featuring punched-card input, an arithmetic unit Babbage called the “mill” (comparable to a modern central processing unit), a memory called the “store,” and programmability. While also unbuilt during Babbage’s lifetime, its design laid the theoretical foundation for modern computers. These engines symbolize the transition from mechanical calculation to programmable computing, showcasing Babbage’s visionary contributions to computer science and technology.
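
The Difference Engine relied on the method of finite differences, which tabulates a polynomial using additions only. The sketch below illustrates that method in software; the function names and the sample polynomial are illustrative assumptions, and the code does not model the machine's actual mechanism.

```python
def initial_differences(values):
    """Build the starting column (value, 1st difference, 2nd difference, ...)."""
    column = []
    row = list(values)
    while row:
        column.append(row[0])
        row = [b - a for a, b in zip(row, row[1:])]
    return column


def tabulate(column, count):
    """Produce `count` successive table values using additions only."""
    state = list(column)
    table = []
    for _ in range(count):
        table.append(state[0])
        # Add each difference into the entry above it, top to bottom.
        for i in range(len(state) - 1):
            state[i] += state[i + 1]
    return table


# Seed with x^2 + x + 41 evaluated at x = 0, 1, 2.
seed = [41, 43, 47]
print(tabulate(initial_differences(seed), 6))
```

Three seed values are enough for a quadratic because its second difference is constant, so every later table entry follows from repeated addition.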

Electromechanical Computers

Electromechanical computers bridged mechanical and electronic eras, enhancing speed and reliability․ They played a crucial role in wartime calculations, setting the stage for fully electronic systems․

3․1․ The Harvard Mark-I: A Breakthrough in Computing

The Harvard Mark-I, completed in 1944, was a revolutionary electromechanical computer weighing about 5 tons. Designed by Howard Aiken and built by IBM, it was assembled from switches, relays, rotating shafts, and clutches rather than electronic components. This large-scale machine could perform complex calculations automatically, making it a significant advancement for military and scientific applications. The Mark-I executed instructions read from punched paper tape, laying the groundwork for future electronic computers. Its development marked a pivotal moment in computing history, bridging the gap between mechanical and electronic systems.

3․2․ The Atanasoff-Berry Computer (ABC)

The Atanasoff-Berry Computer (ABC), conceived by John Vincent Atanasoff in the late 1930s and built with Clifford Berry between 1939 and 1942, is often credited as the first electronic digital computer. It used vacuum tubes to perform calculations in binary arithmetic, making it a pioneering device, although it was not programmable. The ABC was designed to solve systems of linear equations and incorporated a regenerative memory system for storing data. Although it was never patented, the ABC influenced later computers like ENIAC. Its development marked a significant leap in computing technology, showcasing the potential of electronic systems. The ABC’s legacy lies in its innovative design and contribution to the evolution of modern computers.

3․3․ The Evolution of Electromechanical Machines

Electromechanical machines bridged the gap between mechanical and electronic computers, combining mechanical parts with electrical systems․ Devices like the Harvard Mark-I and Z3 showcased this hybrid approach, offering improved speed and accuracy․ These machines used relays and switches to process data, enabling complex calculations․ Their development laid the groundwork for fully electronic computers by demonstrating the potential of integrating electrical components․ Despite being bulky and less efficient than later models, electromechanical machines played a crucial role in advancing computing technology during the mid-20th century․

The First Electronic Computers

The first electronic computers marked a revolutionary shift from mechanical to electronic systems, utilizing vacuum tubes for faster computations․ These machines, like ENIAC, paved the way for modern computing․

4․1․ ENIAC: The First Large-Scale Electronic Computer

ENIAC, completed in 1945 and publicly unveiled in 1946, was developed by John Mauchly and J. Presper Eckert as the first large-scale, general-purpose electronic computer. It used vacuum tubes for calculations and weighed over 27 tons. Designed for the U.S. Army, ENIAC calculated artillery firing tables and demonstrated the potential of electronic computing. Its invention marked a milestone, transitioning from mechanical to electronic systems and paving the way for modern computers. ENIAC’s legacy lies in its role as a precursor to smaller, faster, and more efficient machines. It remains a cornerstone in the history of computer development.

4․2․ UNIVAC: The First Commercial Computer

UNIVAC I, delivered in 1951, was the first commercial computer produced in the United States, marking a shift from military to business applications. Produced by Remington Rand, it used magnetic tapes for data storage and was designed for general-purpose computing. UNIVAC’s introduction revolutionized industries by enabling efficient data processing and decision-making. Its success demonstrated the viability of computers in the commercial sector, paving the way for widespread adoption in business and government. UNIVAC’s impact was significant, making it a landmark in the evolution of computing technology.

4․3․ The Role of Vacuum Tubes in Early Computers

Vacuum tubes played a crucial role in early computers, serving as electronic switches and amplifiers․ They enabled computers like ENIAC to perform calculations at unprecedented speeds․ However, their bulky size, high power consumption, and frequent failures limited early computer design․ Despite these challenges, vacuum tubes were instrumental in advancing computing technology until transistors replaced them in the late 1950s․ Their use marked a significant phase in the transition from mechanical to electronic computing, laying the groundwork for modern computer systems․

The Generations of Computers

Computers have evolved through distinct generations, each marked by technological advancements․ From vacuum tubes to microprocessors, these generations reflect significant improvements in power, size, and functionality․

5․1․ First Generation Computers (1946–1959)

The first generation of computers emerged in the mid-20th century, marked by the use of vacuum tubes․ Machines like ENIAC were large, bulky, and consumed significant power․ These early systems were prone to overheating and required extensive maintenance․ Despite their limitations, they laid the groundwork for modern computing․ Programmers used machine code and assembly languages, and data input relied on punch cards․ The first generation bridged the gap between mechanical and electronic computing, setting the stage for the rapid advancements that followed in subsequent generations․

5․2․ Second Generation Computers (1959–1965)

The second generation introduced transistors, replacing bulky vacuum tubes․ Smaller, faster, and more reliable, these computers reduced power consumption and improved performance․ IBM 1401 and PDP-8 became prominent models․ Magnetic tapes and disks emerged as storage solutions, enhancing data management․ High-level programming languages like COBOL and FORTRAN simplified software development․ This era saw computers transition from scientific tools to business applications, marking a significant shift in their utility and accessibility across industries, setting the stage for further technological advancements in the following generations․

5․3․ Third Generation Computers (1965–1971)

The third generation marked the introduction of integrated circuits, combining multiple transistors on a single chip. This led to smaller, faster, and more efficient computers. IBM System/360 and PDP-11 were notable models. Operating systems became more advanced, enabling multitasking and time-sharing. High-level programming languages such as BASIC and Pascal gained popularity, simplifying software development. Magnetic disk storage improved in capacity and speed. This era bridged the gap between large mainframes and smaller, more accessible systems, paving the way for the microprocessor revolution in the next generation.

5․4․ Fourth Generation Computers (1971–1980)

The fourth generation introduced microprocessors, with Intel’s 4004 being the first commercially available. Computers became smaller, faster, and more affordable. High-level languages like C emerged, enhancing programming efficiency. Magnetic disks improved storage, and graphical user interfaces (GUIs) began to appear. This era saw the rise of personal computers, beginning with machines such as the Apple I and leading to the IBM PC in 1981, revolutionizing accessibility. Mainframes and minicomputers also advanced, with networks and distributed systems gaining prominence. This period laid the groundwork for the widespread adoption of computers in both professional and personal settings.

5․5․ Fifth Generation Computers (1980–Present)

The fifth generation ushered in the era of artificial intelligence and machine learning․ Computers became exponentially faster and smaller, driven by advancements in microprocessors․ Graphical user interfaces (GUIs) became standard, making computers more accessible․ The rise of the internet and mobile devices transformed connectivity․ Cloud computing emerged, enabling data storage and processing over networks․ Modern technologies like quantum computing and the Internet of Things (IoT) are shaping this generation․ These advancements have revolutionized industries, enabling unprecedented capabilities in fields like healthcare, education, and entertainment․

Key Inventions in Computer History

Pivotal innovations like the microprocessor, integrated circuits, and the World Wide Web revolutionized computing, enabling faster, smaller, and more connected systems that transformed modern life․

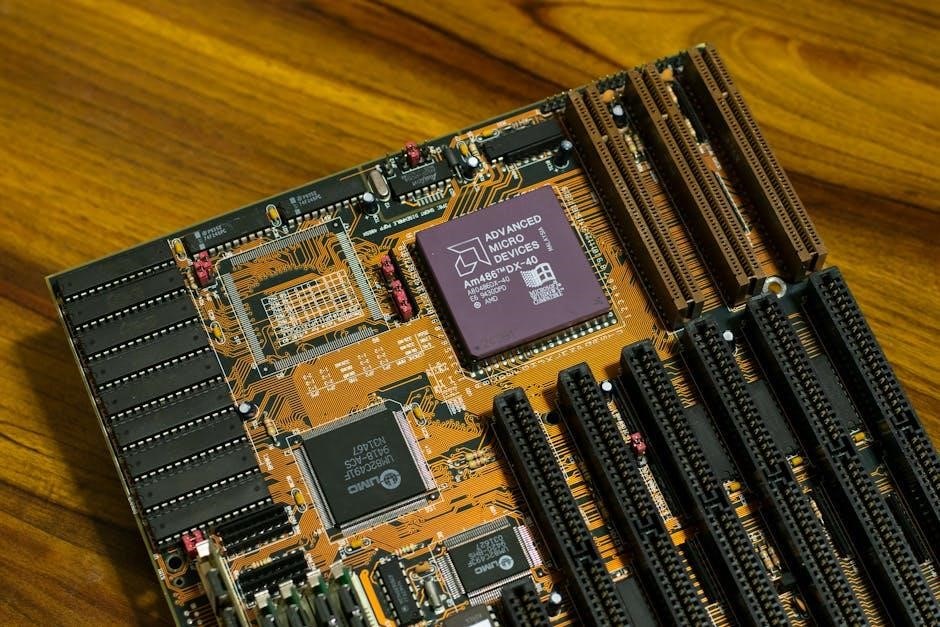

6․1․ The Invention of the Microprocessor

The invention of the microprocessor at Intel in 1971, by a team that included Ted Hoff, Stanley Mazor, Federico Faggin, and Masatoshi Shima, revolutionized computing. This breakthrough integrated an entire CPU onto a single silicon chip, drastically reducing size and increasing efficiency. The microprocessor enabled the development of personal computers, transforming the industry by making computing accessible and affordable. It laid the foundation for modern computing and paved the way for future innovations.

6․2․ The Development of the Integrated Circuit

The integrated circuit (IC) was invented in 1958 by Jack Kilby at Texas Instruments, revolutionizing electronics. Kilby’s prototype was built on germanium, and Robert Noyce at Fairchild Semiconductor independently developed a silicon version in 1959 that proved better suited to mass production. The IC combined multiple components like transistors and resistors on a single chip, reducing size and improving reliability. This innovation lowered production costs and enabled the creation of smaller, faster, and more efficient devices. The IC became a cornerstone of modern computing, driving advancements in computer design and performance. Its development marked a pivotal moment in the history of technology, setting the stage for the rapid evolution of computers.

6․3․ The Creation of the World Wide Web

The World Wide Web was proposed in 1989 by Tim Berners-Lee at CERN as a system for sharing information through linked documents. It rests on three core technologies: HTML for writing pages, URLs for addressing them, and HTTP for transferring them between servers and browsers. By making the internet easy to navigate, the Web turned networked computers into a global platform for communication, commerce, and knowledge sharing.

Important Figures in Computer History

Charles Babbage, Ada Lovelace, John Mauchly, J․ Presper Eckert, and Tim Berners-Lee are pivotal figures whose inventions and contributions shaped the evolution of computers and the internet․

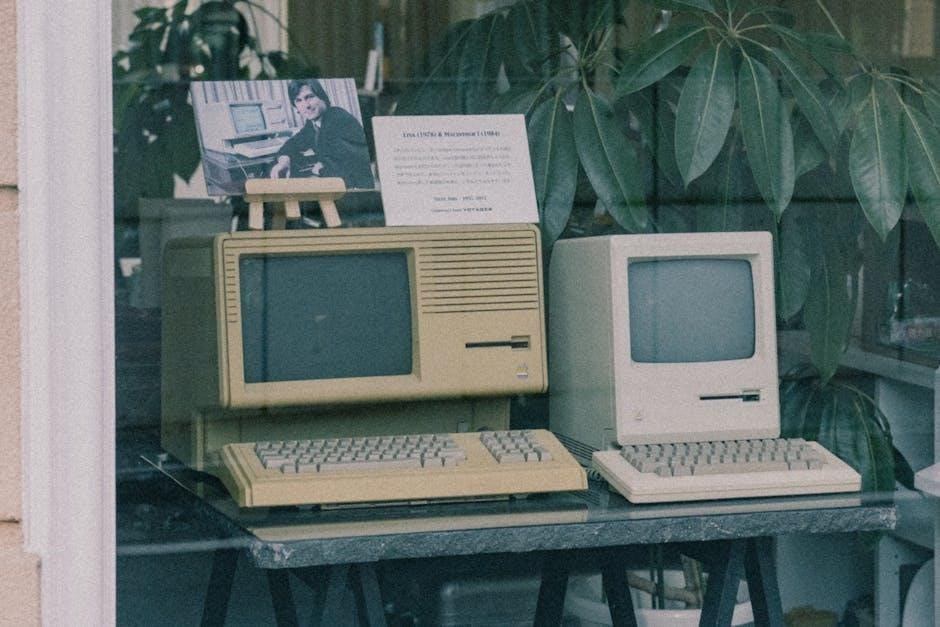

7․1․ Charles Babbage: The Father of the Computer

Charles Babbage, a British mathematician and inventor, is renowned as the “Father of the Computer” for conceptualizing the first mechanical computers. His Difference Engine automated calculation, and his Analytical Engine introduced programmability, with a processing unit he called the “mill” and a memory he called the “store.” Babbage’s vision of a machine capable of general computation laid the foundation for modern computers. His work, though never completed in his lifetime, inspired his collaborator Ada Lovelace, who recognized the Analytical Engine’s potential for solving complex problems beyond mere calculation.

7․2․ Ada Lovelace: The First Programmer

Ada Lovelace, born Augusta Ada Byron, is celebrated as the world’s first computer programmer․ Her groundbreaking work on Charles Babbage’s Analytical Engine led to the creation of the first algorithm intended for a machine․ Lovelace’s notes included a method for calculating Bernoulli numbers, recognized as the first computer program․ She envisioned the engine’s potential beyond calculation, suggesting it could create music or art․ Her visionary insights and pioneering work in programming have left a lasting legacy in computer science and history․
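
As an illustration of the kind of computation involved, the sketch below calculates Bernoulli numbers with a standard textbook recurrence. This is an assumption chosen for brevity: it is not a reconstruction of the procedure in Lovelace's notes, and it uses the convention in which B_1 = -1/2.

```python
from fractions import Fraction
from math import comb


def bernoulli_numbers(n: int) -> list[Fraction]:
    """Return [B_0, ..., B_n] as exact fractions.

    Uses the recurrence sum_{k=0}^{m} C(m+1, k) * B_k = 0 for m >= 1,
    solved for B_m at each step.
    """
    b = [Fraction(1)]
    for m in range(1, n + 1):
        total = sum(comb(m + 1, k) * b[k] for k in range(m))
        b.append(-total / (m + 1))
    return b


for index, value in enumerate(bernoulli_numbers(8)):
    print(index, value)
```

Exact fractions are used instead of floating point because each Bernoulli number depends on all the earlier ones, so rounding errors would otherwise accumulate.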

7․3․ John Mauchly and J․ Presper Eckert: Creators of ENIAC

John Mauchly and J. Presper Eckert were pioneers in computer technology, famously creating ENIAC, the first large-scale electronic digital computer. Completed in 1945, ENIAC used vacuum tubes to perform calculations, revolutionizing computing speed and efficiency. Their invention addressed the need for rapid calculations during World War II, particularly for computing artillery firing tables, although the machine was finished only after the war had ended. Mauchly and Eckert’s collaboration laid the foundation for modern computing, earning them a prominent place in the history of computer science and technology.

Evolution of Programming Languages

Programming languages evolved from basic machine code to high-level languages like COBOL and FORTRAN, advancing to modern languages such as C++ and Java, enhancing efficiency and accessibility․

8․1․ Early Programming Languages: Machine Code and Assembly

The earliest programming languages were machine code and assembly․ Machine code consists of binary instructions directly executable by a computer’s processor․ Assembly languages introduced mnemonic codes, representing machine instructions, making programming more manageable․ These low-level languages were essential for early computers, as they directly controlled hardware․ Despite their efficiency, they were time-consuming and error-prone, leading to the development of higher-level languages․ The transition from machine code to assembly marked a significant step in simplifying programming, paving the way for modern computing advancements․
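
The relationship between assembly mnemonics and machine code can be sketched with a toy assembler. The instruction set here is entirely hypothetical (a 4-bit opcode followed by a 4-bit operand) and does not correspond to any real processor.

```python
# Hypothetical 8-bit instruction format: 4-bit opcode, then 4-bit operand.
OPCODES = {"LOAD": 0b0001, "ADD": 0b0010, "STORE": 0b0011, "HALT": 0b1111}


def assemble(source: str) -> list[str]:
    """Translate mnemonic lines into 8-bit binary strings."""
    words = []
    for raw in source.splitlines():
        line = raw.split(";")[0].strip()  # drop comments and whitespace
        if not line:
            continue
        parts = line.split()
        mnemonic = parts[0].upper()
        operand = int(parts[1]) if len(parts) > 1 else 0
        if mnemonic not in OPCODES:
            raise ValueError(f"unknown mnemonic: {mnemonic}")
        if not 0 <= operand <= 15:
            raise ValueError(f"operand out of range: {operand}")
        words.append(format((OPCODES[mnemonic] << 4) | operand, "08b"))
    return words


program = """
LOAD 5    ; read the value at address 5
ADD 3     ; add the value at address 3
STORE 9   ; write the result to address 9
HALT
"""
print(assemble(program))
```

Each mnemonic line maps to exactly one binary word, which is why assembly is considered a thin, human-readable layer over machine code.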

8․2․ High-Level Programming Languages: COBOL and FORTRAN

COBOL and FORTRAN were pioneering high-level languages․ COBOL, developed in 1959, was designed for business applications, emphasizing readability with English-like syntax․ FORTRAN, introduced in 1957, was tailored for scientific computations, optimizing mathematical operations․ Both languages abstracted away low-level details, making programming more efficient and accessible․ COBOL’s focus on business and FORTRAN’s on science showcased versatility, setting the foundation for modern high-level languages․ Their impact revolutionized software development, enabling broader applications across industries and disciplines․

8․3․ Modern Programming Languages: C++ and Java

C++ and Java brought object-oriented programming into the mainstream. C++, created by Bjarne Stroustrup and first released commercially in 1985, extended C with object-oriented features, enhancing flexibility and performance. Java, released in 1995 by a team at Sun Microsystems led by James Gosling, emphasized platform independence with “write once, run anywhere.” Both languages became cornerstone technologies, driving software development across industries. Continuous updates, like C++11 and Java 8, have kept them relevant in systems, web, and mobile applications, shaping modern computing landscapes and development practices.

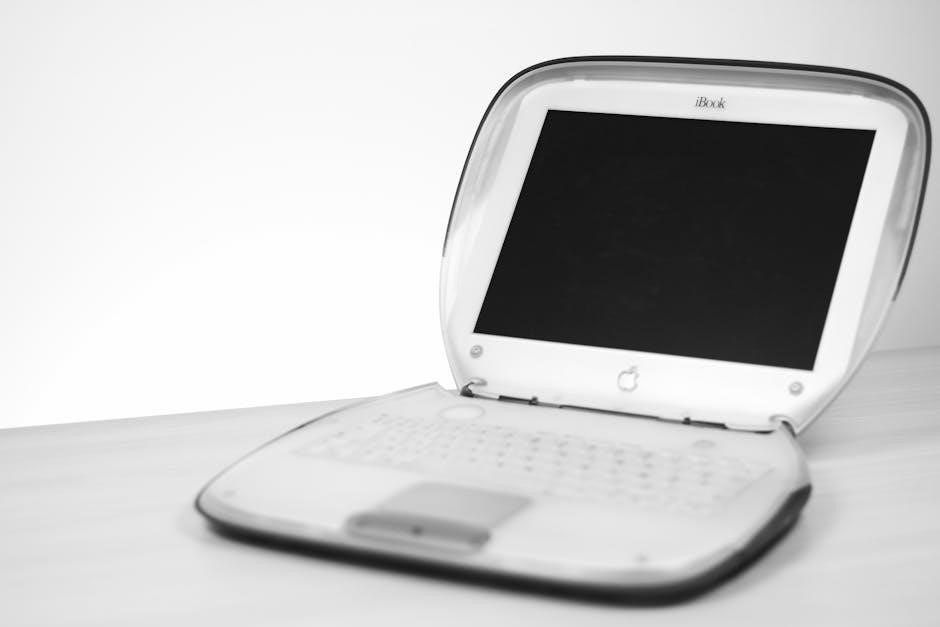

The Development of Personal Computers

The development of personal computers revolutionized technology, starting with Apple’s pioneering models and IBM’s standardized systems․ Microsoft’s GUI and software advancements democratized computing for homes and businesses globally․

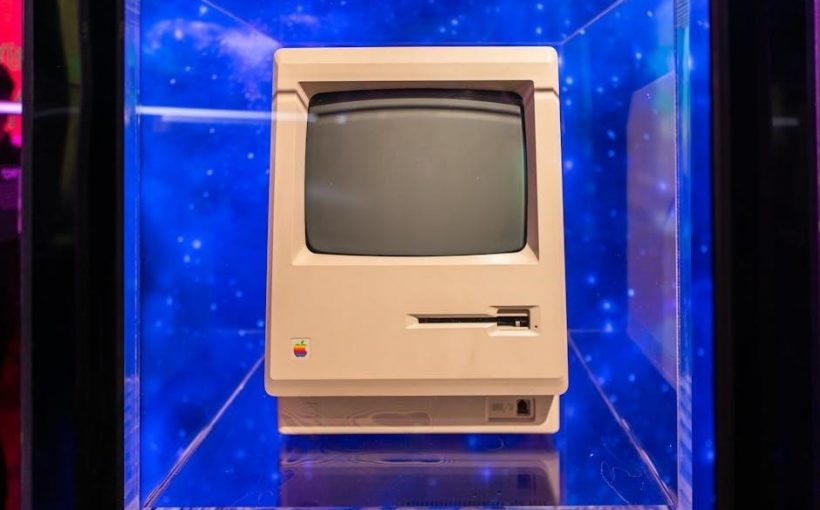

9․1․ The Apple I and the Birth of Personal Computing

The Apple I, introduced in 1976, marked a pivotal moment in personal computing. Designed by Steve Wozniak and marketed with Steve Jobs, it was one of the first personal computers sold as a fully assembled circuit board. Priced at $666.66, it utilized the MOS Technology 6502 processor and 4KB of RAM, expandable to 48KB with expansion cards. This innovation made computing accessible to individuals, sparking a revolution. The Apple I laid the groundwork for the Apple II, further solidifying Apple’s role in the burgeoning personal computer industry.

9․2․ The IBM PC: Standardizing the Personal Computer

The IBM PC, released in 1981, revolutionized personal computing by setting an industry standard․ Based on an open architecture, it featured an Intel 8088 processor, 16KB to 256KB of RAM, and expansion slots for customization․ IBM’s standardized design allowed third-party hardware and software compatibility, fostering a vibrant ecosystem of peripherals and applications․ This approach made the IBM PC a dominant force in both business and home computing, cementing its legacy as a cornerstone of the personal computer era․

9․3․ The Rise of Microsoft and the Graphical User Interface (GUI)

Microsoft’s partnership with IBM in the early 1980s marked a pivotal moment. Microsoft provided MS-DOS for IBM PCs, establishing itself as a software leader. Windows 1.0 (1985) brought the graphical user interface (GUI), an approach pioneered at Xerox PARC and popularized by the Apple Macintosh, to IBM-compatible PCs, making computers more accessible. Microsoft’s focus on user-friendly design and compatibility with various hardware propelled its dominance. The GUI’s visual icons and menus transformed computing, enabling non-technical users to navigate systems with far less training, thus driving widespread adoption and reshaping the personal computer landscape.

The Impact of Computers on Society

Computers have revolutionized industries, enhanced communication, and transformed daily life, driving efficiency, innovation, and global connectivity while reshaping cultural and social landscapes․

10․1․ Computers in Education and Research

Computers have transformed education and research by enabling access to vast resources, facilitating data analysis, and fostering collaboration․ They allow students to explore digital learning platforms, while researchers utilize advanced tools for simulations and experiments․ This digital integration has enhanced the quality and reach of education, making it more interactive and accessible․ Additionally, computers have accelerated scientific discoveries by processing complex data and enabling breakthroughs in various fields, from medicine to space exploration․

10․2․ Computers in Business and Industry

Computers revolutionized business and industry by automating processes, enhancing efficiency, and enabling data-driven decision-making․ Early systems like UNIVAC facilitated large-scale data processing, while advancements in programming languages such as COBOL supported complex business applications․ Computers also transformed manufacturing through automation and robotics, improving productivity and product quality․ Today, industries rely on computers for supply chain management, customer relationship systems, and financial transactions, making them indispensable tools for modern commerce and industrial operations․

10․3․ Computers in Medicine and Healthcare

Computers have transformed medicine and healthcare by enhancing diagnostic accuracy, streamlining patient care, and enabling advanced research․ Early applications included data analysis and electronic health records, improving efficiency․ Modern technologies like MRI and CT scans rely on computers for imaging, while telemedicine expands access to care․ Computers also facilitate drug development and personalized treatment plans, revolutionizing healthcare delivery and improving patient outcomes globally․

The Future of Computing

The future of computing promises exciting advancements, with technologies like quantum computing, AI, and IoT leading the way, set to revolutionize industries and daily life further․

11․1․ Artificial Intelligence and Machine Learning

Artificial Intelligence (AI) and Machine Learning (ML) are revolutionizing computing, enabling systems to learn, adapt, and make decisions autonomously․ These technologies, rooted in early computing innovations, are transforming industries by enhancing data analysis, natural language processing, and automation․ AI/ML applications, such as predictive systems and intelligent interfaces, are driving advancements in healthcare, education, and robotics․ As computing power grows, so does the potential for AI/ML to solve complex problems, making them pivotal in shaping the future of technology and society․ Their transformative potential continues to inspire innovation and exploration․

11․2․ Quantum Computing: The Next Frontier

Quantum computing represents a potentially revolutionary leap in processing power, leveraging quantum mechanics to solve certain classes of problems far faster than classical computers. By harnessing phenomena like superposition and entanglement, quantum systems could tackle challenges in cryptography, drug discovery, and optimization. This technology promises to redefine industries and scientific research, although practical large-scale machines are still under development. As quantum computing advances, it is poised to become a cornerstone of future technological progress, driving innovation and transforming the digital landscape. Its potential continues to inspire global research efforts.

11․3․ The Internet of Things (IoT) and Connected Devices

The Internet of Things (IoT) has revolutionized the way devices interact, enabling seamless communication and data exchange. From smart home gadgets to industrial sensors, IoT has interconnected billions of devices worldwide. This technology enhances efficiency, automates tasks, and improves decision-making across industries. Connected devices collect and analyze vast amounts of data, driving innovation in healthcare, agriculture, and urban planning. As IoT continues to evolve, it promises to create a more integrated and intelligent world, transforming how we live and work in the digital age.

The Role of Computers in Modern Science

Computers are essential tools in modern science, enabling complex simulations, data analysis, and experimentation․ Supercomputers drive breakthroughs in fields like climate modeling, genomics, and particle physics․

12․1․ Supercomputers and Scientific Research

Supercomputers are pivotal in advancing scientific research, enabling simulations and data processing at unprecedented scales․ They power climate modeling, genomics, and particle physics, driving groundbreaking discoveries․ These machines handle complex calculations, from weather forecasting to materials science, accelerating innovation․ Their role in medical research, such as drug discovery, highlights their versatility․ Supercomputers also aid in understanding cosmic phenomena and optimizing engineering designs, making them indispensable tools for modern science․ Their capabilities continue to expand, fostering progress across diverse disciplines and pushing the boundaries of human knowledge․

12․2․ Computers in Space Exploration

Computers have revolutionized space exploration by enabling precise navigation, data processing, and communication. Guidance systems rely on advanced algorithms to plot trajectories and control spacecraft. Onboard computers manage telemetry, supporting data transmission to Earth. They also process scientific experiments, from analyzing Martian soil to monitoring cosmic radiation. Systems ranging from the guidance computer used in NASA’s Apollo missions to those aboard the International Space Station demonstrate their critical role. Computers are integral to missions, facilitating historic achievements like landing rovers on Mars and processing telescope observations of distant galaxies, thus advancing humanity’s understanding of the cosmos.